Continuous deployment with docker

@sublimino

Delivery Engineer

News UK, Visa, British Gas

www.binarysludge.com

codes

Containment

data

efficacy

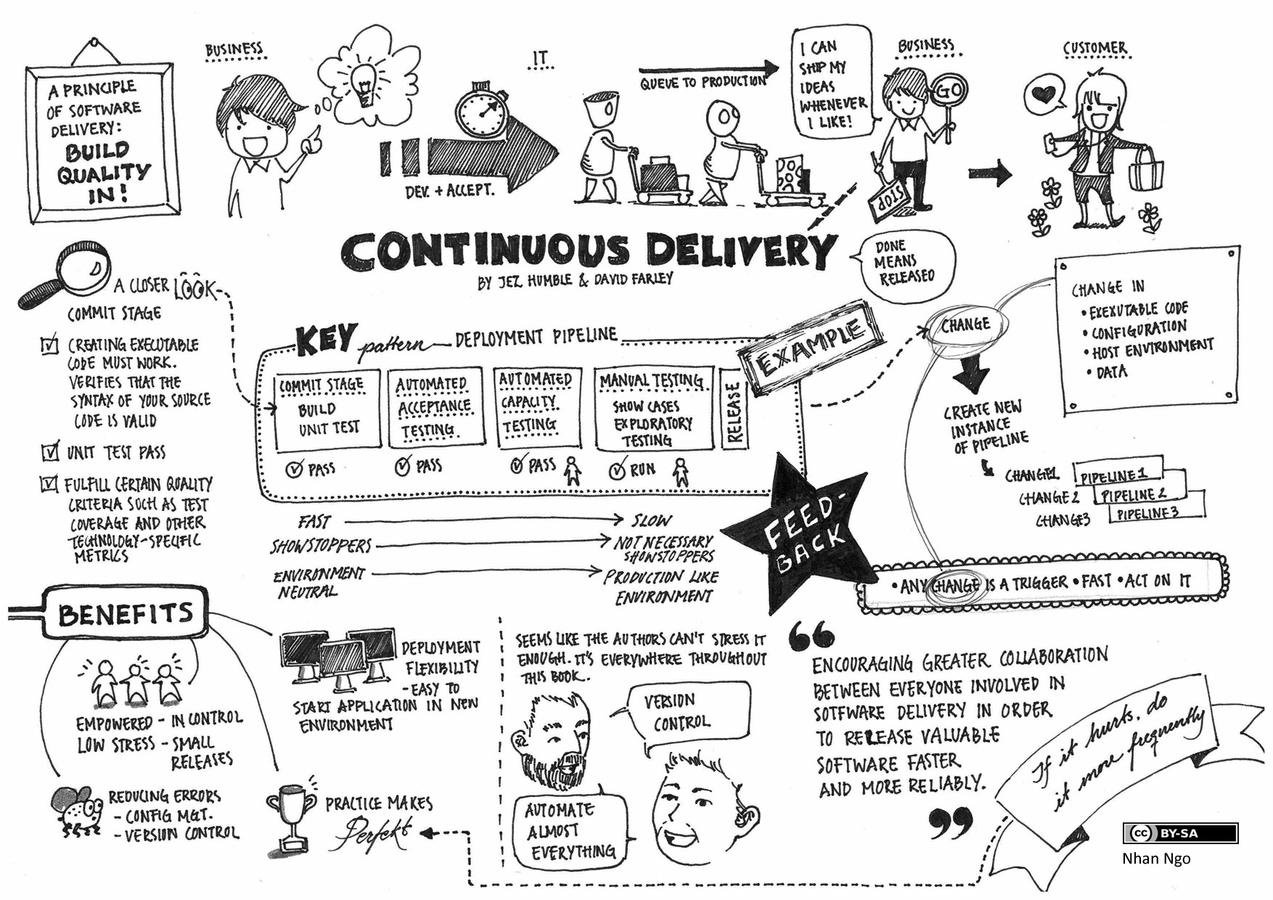

Principles

- Partial or whole stack deployable on developers' machines

- Ease of full stack debugging

- Build server verifies tests rather than running them for the first time

- No staging environment, feature toggles instead

- Devs can run system integration tests, but they're slow to bootstrap

- System integration faster, incremental deployments

pick any two

more Principles

- Containers identical from dev through test to production

- Logic branched on environment variables

- Deterministic pipeline

- Constant cycle-time optimisation

Continuous delivery/deployment

- Always ready to deploy vs always deployed

-

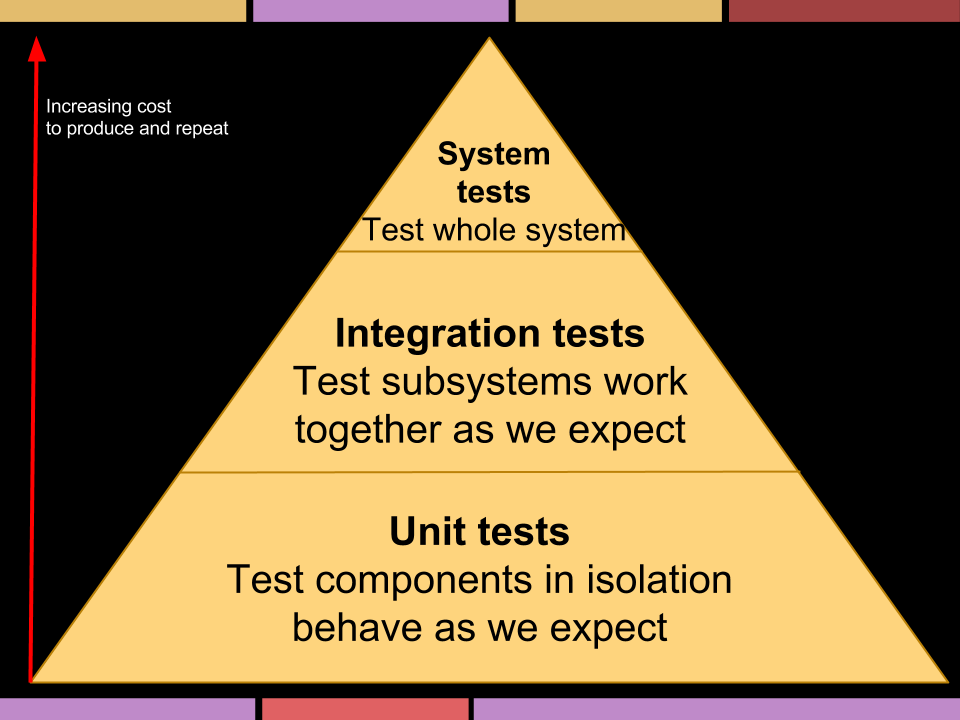

All code/containers must be testable

- Test teams should never be bigger than dev teams

- Humans are fallible, testers more so

- Machines are all that will be left one day

BRING PAIN FORWARD

deliver continuously

developer Workflow

- Hack some code

- Run local tests

- Commit to build server

- Profit

On green deploy

- Push new code version to production alongside current

- Balance traffic over to new version

- Monitor cluster health, error rates and response times

- Run post-deployment acceptance tests against the cluster

- Revert to previous version if KPI tolerances exceeded
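The revert step above comes down to comparing observed KPIs with tolerances. A minimal sketch of that check, not the pipeline's actual implementation: the function names, the two KPIs and the default thresholds are all hypothetical, and the observed values would come from whatever monitoring is in place.

```shell
#!/bin/sh
# Hypothetical sketch: decide whether a new version stays or is reverted.
# All values are integers (e.g. errors per 1000 requests, milliseconds).

# Succeeds (exit 0) when the observed value is within the tolerance.
kpi_within_tolerance() {
  observed=$1
  tolerance=$2
  [ "$observed" -le "$tolerance" ]
}

# Prints "keep" or "rollback" for an error rate and a response time.
deployment_verdict() {
  error_rate=$1
  response_ms=$2
  # Assumed tolerances, overridable via the environment.
  max_error_rate=${MAX_ERROR_RATE:-5}
  max_response_ms=${MAX_RESPONSE_MS:-500}

  if kpi_within_tolerance "$error_rate" "$max_error_rate" &&
     kpi_within_tolerance "$response_ms" "$max_response_ms"; then
    echo keep
  else
    echo rollback
  fi
}
```

A deploy script could call `deployment_verdict` after the post-deployment acceptance tests and shift traffic back to the previous version when it prints `rollback`.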

Implementation

- Shared code repository

- npm module

- Present in every repository/image (even couchbase/redis)

- Contains linting rules, extensible task runner, read-only credentials

- Idempotent npm post-install task creates test directories and manipulates filesystem

- Any change triggers cascading builds in dependent jobs

Task runner

- Stream processing and parallel task execution

- Abstract invocation of language/library specificities

- Tight dev feedback loop, reduced cycle-time

Unified task interface

Abstracts docker, fleet and test commands

$ gulp <tab>

browserify fleet:restart test:functional

build fleet:start test:functional:remote

build-properties help test:functional:watch

build:watch less test:integration

default lint test:integration:remote

docker:build livereload test:integration:watch

...

- 4 different test runners, correct one inferred and invoked

- Defers test execution to cross platform BASH scripts

- Simple CLI options abstracted, e.g. --xml

- BATS acceptance tested

- https://github.com/sstephenson/bats

- OSX vs Linux - some GNU-ish complexities

- Build server has no logic
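Runner inference and flag abstraction as described above might look roughly like this. It is a sketch under assumptions: the talk does not say how the runner is inferred, so the file-extension convention, the runner names and both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch of a cross-platform test wrapper.

# Infer a runner name from the test files found in a directory.
# The extension-to-runner mapping is an assumed convention.
infer_runner() {
  dir=$1
  if ls "$dir"/*.bats >/dev/null 2>&1; then
    echo bats
  elif ls "$dir"/*.js >/dev/null 2>&1; then
    echo mocha
  else
    echo unknown
    return 1
  fi
}

# Reduce the CLI arguments to an output format: "xml" if --xml
# was passed anywhere, otherwise "text". Other flags are ignored.
parse_output_format() {
  format=text
  for arg in "$@"; do
    case $arg in
      --xml) format=xml ;;
    esac
  done
  echo "$format"
}
```

Keeping this logic in scripts inside the repository is what lets the build server stay free of logic: it only invokes the same entry point a developer would.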

dev to docker

- Component developed with tests

- Developer builds Dockerfile for component

- FROM common base image

- ADD source files

- RUN installation of dependencies
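The three Dockerfile steps above can be sketched as a shell function that prints a Dockerfile. The base image name `example/base`, the `/app` path and the use of `npm install` are assumptions for illustration; the talk does not show the real file.

```shell
#!/bin/sh
# Hypothetical sketch: print a minimal Dockerfile following the
# FROM / ADD / RUN pattern. The first argument is the base image.
render_dockerfile() {
  base_image=${1:-example/base}
  cat <<EOF
FROM ${base_image}
ADD . /app
WORKDIR /app
RUN npm install
EOF
}
```

For example, `render_dockerfile example/base > Dockerfile` followed by `docker build -t component .` would build an image from the current directory.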

testing, testing

Test setup script

- Global setup/teardown and test override hooks

- External services brought up with fig

- Each repository's fig.yml configuration file:

- Defines the component's invocation

- ...and that of any immediate dependencies

fig.yml

- fig.yml of component under test is copied to fig-test.yml

- Dependent components' fig.yml files merged into fig-test.yml

- Brings in transitive dependencies

- Environment variables are templated

- NODE_ENV

- Database names

- IPs and ports

- (single b2d VM or single build node)
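A rough sketch of the merge and templating steps above. It assumes service names are unique across files, so that concatenation is a valid merge, and it uses a `{{NAME}}` placeholder syntax that is purely hypothetical, as are the function names. Resolving transitive dependencies is not shown.

```shell
#!/bin/sh
# Hypothetical sketch: build fig-test.yml and fill in templated values.

# Concatenate the component's fig.yml and its dependencies' files
# into one output file. First argument is the output path.
build_fig_test() {
  out_file=$1
  shift
  : > "$out_file"
  for fig_file in "$@"; do
    cat "$fig_file" >> "$out_file"
    echo >> "$out_file"
  done
}

# Print the file with {{NODE_ENV}} and {{DB_NAME}} substituted.
# Values containing "/" would need a different sed delimiter.
render_template() {
  template=$1
  node_env=$2
  db_name=$3
  sed -e "s/{{NODE_ENV}}/${node_env}/g" \
      -e "s/{{DB_NAME}}/${db_name}/g" "$template"
}
```

A test setup script could run `build_fig_test fig-test.yml fig.yml ../dependency/fig.yml` and write the output of `render_template` to the file that fig is pointed at.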

fig on a build server

- Used to complain at the absence of a TTY

- Hacked around it...

export SCRIPT_COMMAND="$NOHUP_COMMAND script --quiet --command"

funky cross platform fixes required

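export TARGET_FIG_FILE=${TARGET_FIG_FILE:-fig-test.yml}
# Project name: the current directory name reduced to lowercase letters
export PROJECT_NAME=$(basename "$(pwd)" | sed 's/[^a-z]//g')
export FIG_COMMAND="fig --file=${TARGET_FIG_FILE} --project-name ${PROJECT_NAME}"
...
# Run fig under script(1) so that it sees a TTY on the build server
figUp() {
  # The whole fig command is passed as one argument, as script --command expects
  $SCRIPT_COMMAND "$FIG_COMMAND up"
}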

...gnu gnu gnu

This is the price paid for cross platform compatibility

# Linux defaults
export BASE64_COMMAND="base64 -w 0"
export NOHUP_COMMAND="nohup"
export SCRIPT_COMMAND="$NOHUP_COMMAND script --quiet --command"

# OSX overrides: BSD script and base64 take different arguments
[[ $(uname) = 'Darwin' ]] && {
  # BSD script expects: script [-q] [file [command ...]]
  darwinScript() {
    # Strip any surrounding double quotes from the command string
    $NOHUP_COMMAND script -q typescript $(echo "$1" \
      | sed -e 's/^"//' -e 's/"$//')
  }
  SCRIPT_COMMAND="darwinScript"
  BASE64_COMMAND="base64"
  # Wrap nohup with reattach-to-user-namespace when it is installed
  [[ $(command -v reattach-to-user-namespace) ]] && {
    NOHUP_COMMAND="reattach-to-user-namespace nohup"
  }
}

back to test Setup script

- HTTP health checks and /health endpoints are used to determine availability

- Sleep is avoided where possible

- Other necessary pre-test config

- API oAuth authentication

- Endpoints for exposing tunnels to Sauce labs

- JS/CSS build chains
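Polling a health endpoint instead of sleeping for a fixed time might be sketched as below. The function names and the example URL are hypothetical. A short pause between polls remains, but the wait ends as soon as the service answers.

```shell
#!/bin/sh
# Hypothetical sketch: wait for a service to become available.

# Re-run a command until it succeeds or the timeout (in seconds)
# elapses. Usage: wait_until <timeout> <command> [args...]
wait_until() {
  timeout=$1
  shift
  elapsed=0
  until "$@"; do
    [ "$elapsed" -ge "$timeout" ] && return 1
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 0
}

# Probe a URL; curl --fail exits non-zero on HTTP status 400 or above.
health_ok() {
  curl --silent --fail --output /dev/null "$1"
}
```

For example, `wait_until 30 health_ok http://localhost:8080/health` blocks for at most about 30 seconds and returns non-zero if the endpoint never becomes healthy.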

docker image tests

- Tests are run against a container's public interface - i.e. exposed ports

- Database fixtures in a separate image, invoked using links

- On build server test completion, image is tagged

- Tagged image pushed to private docker registry
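Tagging and pushing after a green build could look like the sketch below. The registry host, the image names and the use of a build number as the tag are assumptions, and `DOCKER` is a hypothetical override that allows a dry run.

```shell
#!/bin/sh
# Hypothetical sketch: tag a tested image and push it to a registry.

# Compose an image reference of the form registry/name:tag.
image_ref() {
  echo "$1/$2:$3"
}

# Usage: promote_image <local-image> <registry> <name> <tag>
# Set DOCKER="echo docker" to print the commands instead of running them.
promote_image() {
  local_image=$1
  ref=$(image_ref "$2" "$3" "$4")
  ${DOCKER:-docker} tag "$local_image" "$ref" &&
    ${DOCKER:-docker} push "$ref"
}
```

With `DOCKER="echo docker"`, `promote_image web registry.example.com web 42` prints the `docker tag` and `docker push` commands without touching the registry.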

Promotion

- Once the component's tests have passed, system integration tests run

- These ensure the interfaces between components match

- On completion, non-functional tests are run in an isolated environment

- If all pass, the component is marked for deployment

Deployment

- Orchestration still nascent

- Current design informed by Kubernetes

- In lieu of 1.0, we've implemented a stripped-down feature set

- Service discovery via etcd

- HAProxy routing via confd

- Rolling updates

- Added cluster immune system and rollback


In conclusion

- Continuous deployment

- Start with tests

- Build a deterministic pipeline

- Deploy to production

- Monitor everything

- Use KPIs for a cluster immune system

- Roll back if KPIs exceed tolerances

- Halt the pipeline, analysis/5 whys?

- Write a failing test case before fixing it

- Re-enable the pipeline

- Sleep well at night

fin
